0 - Intro

A single-layer, single-output (SLSO) perceptron is a function

if

The idea is

let
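As a concrete reference point, here is a minimal sketch of an SLSO perceptron. It assumes the common form $f(\mathbf{x}) = \sigma(\mathbf{w} \cdot \mathbf{x} + b)$ with weights $\mathbf{w} \in \mathbb{R}^n$, bias $b \in \mathbb{R}$ and activation $\sigma : \mathbb{R} \to \mathbb{R}$. The helper name `slso_perceptron` and the logistic activation in the example are illustrative choices, not taken from these notes.

```python
import math
from typing import Callable, Sequence

def slso_perceptron(
    w: Sequence[float],
    b: float,
    sigma: Callable[[float], float],
) -> Callable[[Sequence[float]], float]:
    """Return f(x) = sigma(w . x + b) (assumed SLSO form; hypothetical helper)."""
    def f(x: Sequence[float]) -> float:
        if len(x) != len(w):
            raise ValueError("input dimension must match weight dimension")
        # Pre-activation: an affine function of the input.
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        # Output: the activation applied to the pre-activation.
        return sigma(z)
    return f

# Example: a 2-input perceptron with a logistic activation.
logistic = lambda z: 1.0 / (1.0 + math.exp(-z))
f = slso_perceptron(w=[1.0, -2.0], b=0.5, sigma=logistic)
print(f([0.5, 0.5]))  # z = 0.5 - 1.0 + 0.5 = 0.0, so this prints 0.5
```

Everything nonlinear about an SLSO perceptron sits in the scalar map $\sigma$. The input only enters through the affine pre-activation, which is why the shape of the decision boundary depends on properties of $\sigma$ such as injectivity.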

We have shown that injective activations produce linear decision boundaries, but that does not mean that non-injective activations produce nonlinear boundaries.
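One way to see both halves of this remark is to take the decision boundary to be a level set $\{\mathbf{x} : \sigma(\mathbf{w} \cdot \mathbf{x} + b) = t\}$ (an assumption about the threshold convention). That set is the union, over every $z$ with $\sigma(z) = t$, of the hyperplanes $\mathbf{w} \cdot \mathbf{x} + b = z$. An injective $\sigma$ has at most one such $z$, so it gives a single hyperplane or nothing. A non-injective $\sigma$ can give several, but they are all parallel hyperplanes. The sketch below checks this for $\tanh$ (injective) and for the illustrative non-injective choice $\sigma(z) = z^2$, where $t = 4$ gives the two hyperplanes $\mathbf{w} \cdot \mathbf{x} + b = \pm 2$. The function names are hypothetical.

```python
import math
from typing import List, Sequence

# Assumed convention: decision boundary = level set {x : sigma(w . x + b) = t}
#   = union over z in sigma^{-1}(t) of the hyperplanes {x : w . x + b = z}.

def boundary_offsets_tanh(t: float) -> List[float]:
    """Preimage of t under tanh, which is injective with range (-1, 1)."""
    return [math.atanh(t)] if -1.0 < t < 1.0 else []

def boundary_offsets_square(t: float) -> List[float]:
    """Preimage of t under the non-injective activation sigma(z) = z**2."""
    if t < 0:
        return []              # t not in the range: empty boundary
    if t == 0:
        return [0.0]           # one hyperplane: w . x + b = 0
    r = math.sqrt(t)
    return [-r, r]             # two parallel hyperplanes

def point_on_hyperplane(w: Sequence[float], b: float, z: float) -> List[float]:
    """The point of {x : w . x + b = z} closest to the origin (requires w != 0)."""
    norm_sq = sum(wi * wi for wi in w)
    scale = (z - b) / norm_sq
    return [scale * wi for wi in w]

w, b, t = [1.0, -2.0], 0.5, 4.0
print("tanh offsets at 0.5:", boundary_offsets_tanh(0.5))   # a single hyperplane
for z in boundary_offsets_square(t):                         # two parallel hyperplanes
    x = point_on_hyperplane(w, b, z)
    pre = sum(wi * xi for wi, xi in zip(w, x)) + b
    # Each sampled point lies on the level set: pre**2 is (up to rounding) t.
    print(f"offset {z:+.1f}: x = {x}, sigma(pre) = {pre * pre:.6f}")
```

So $z^2$ is non-injective, yet its boundary is still made of hyperplanes. Non-injectivity alone only changes how many parallel pieces appear.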

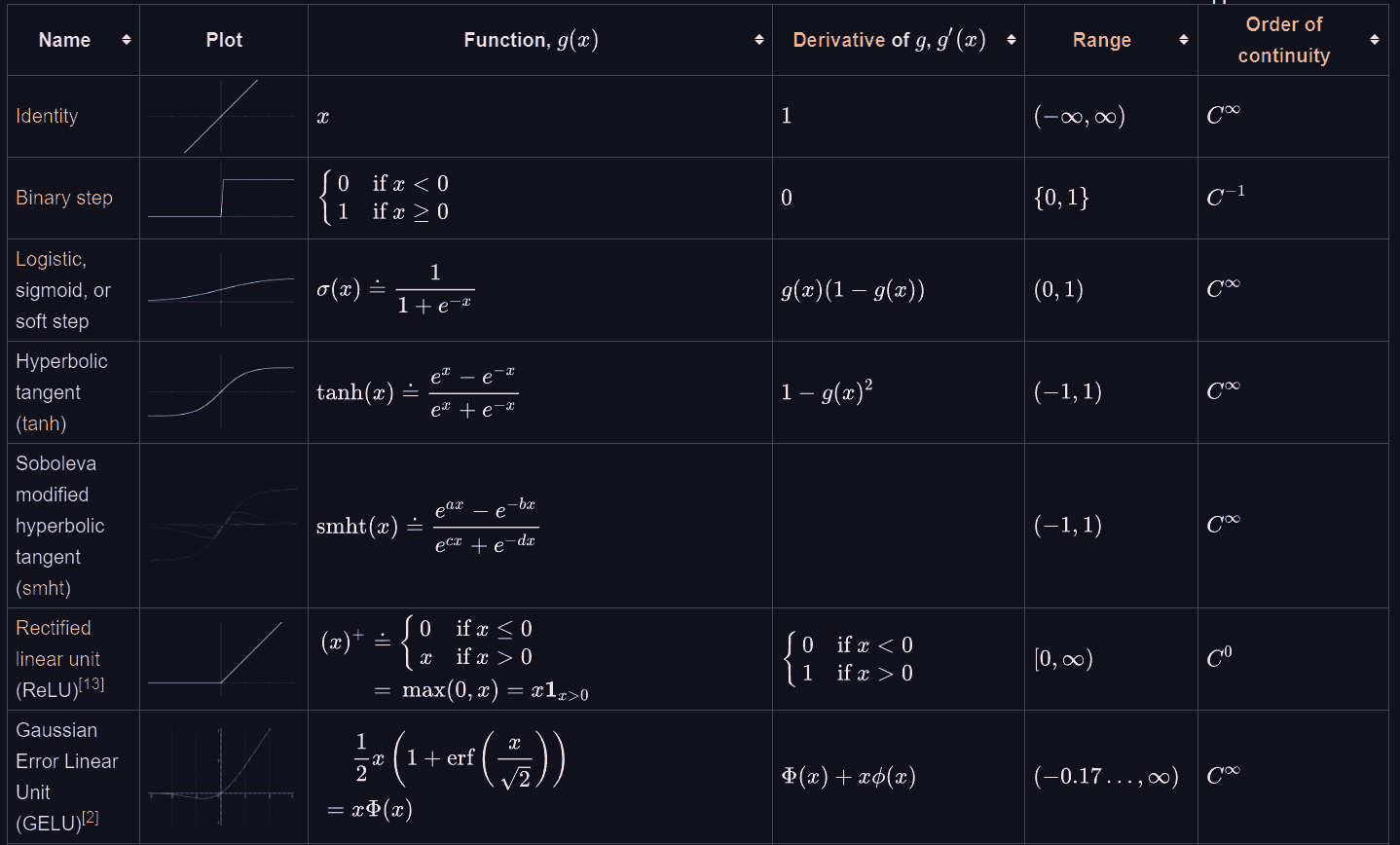

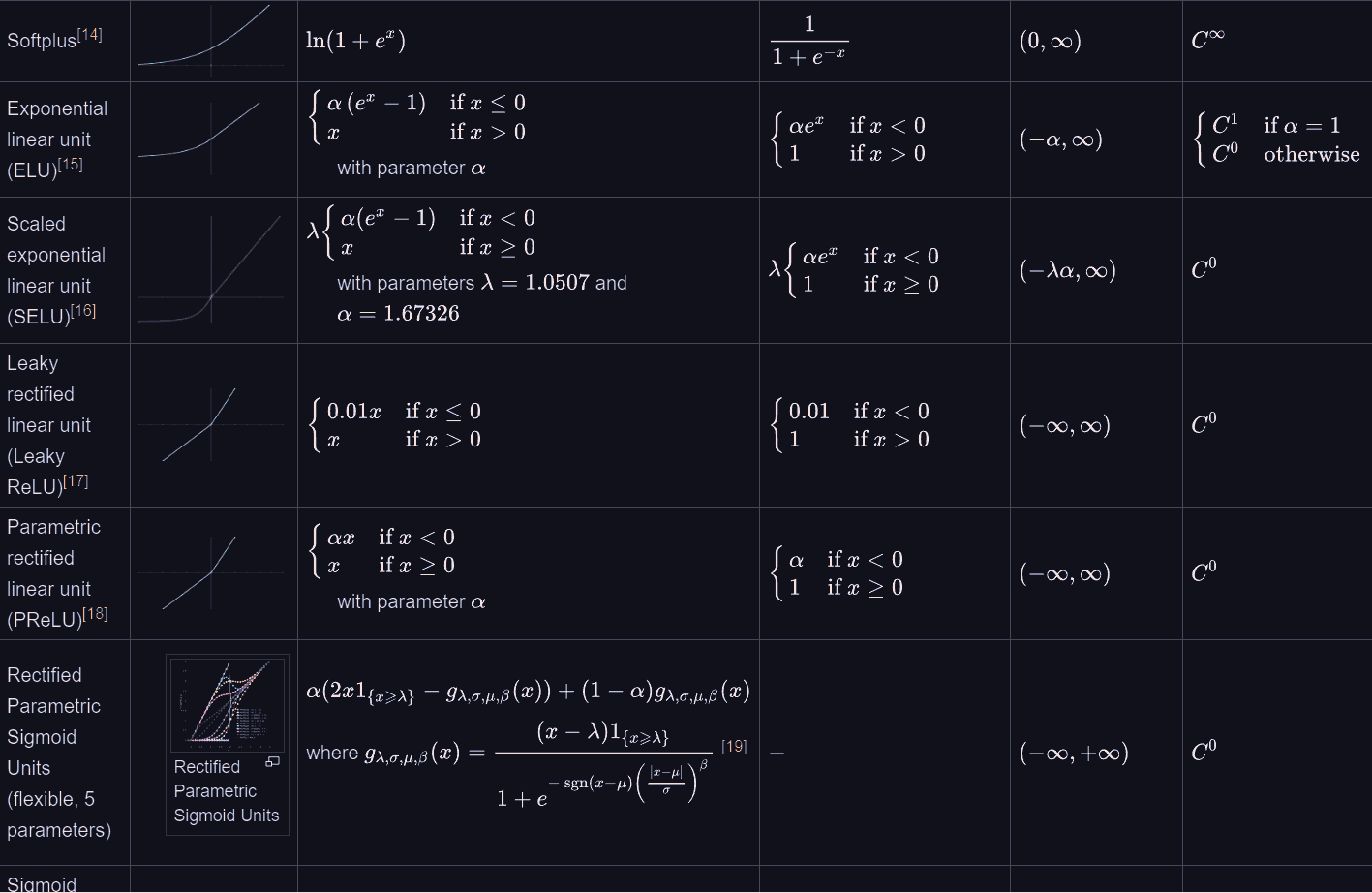

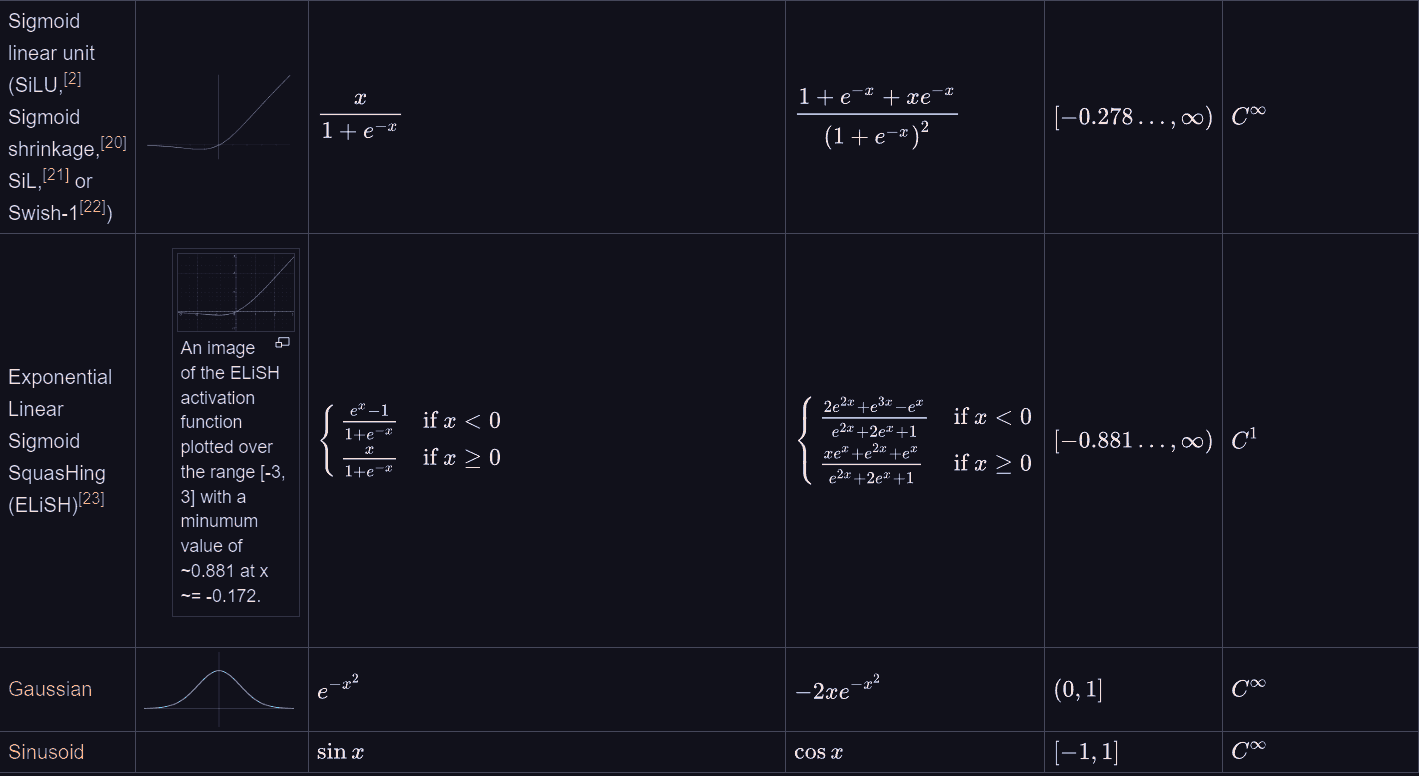

Activations for SLSO Perceptrons:
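To connect candidate activations to the injectivity question, here is a small sampled test. For a continuous $\sigma : \mathbb{R} \to \mathbb{R}$, injective is equivalent to strictly monotone, so the sketch checks strict monotonicity on a grid over $[-5, 5]$. The grid, the helper name `looks_injective`, and the particular activations listed are illustrative assumptions. A `False` here comes from repeated values or a change of direction, which rules out injectivity for these activations. A `True` is only evidence on the sampled range, not a proof.

```python
import math
from typing import Callable, Dict

def looks_injective(sigma: Callable[[float], float],
                    lo: float = -5.0, hi: float = 5.0, n: int = 1001) -> bool:
    """Sampled strict-monotonicity test on [lo, hi] (hypothetical helper)."""
    zs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    ys = [sigma(z) for z in zs]
    diffs = [y1 - y0 for y0, y1 in zip(ys, ys[1:])]
    # Strictly increasing or strictly decreasing on every sampled step.
    return all(d > 0 for d in diffs) or all(d < 0 for d in diffs)

activations: Dict[str, Callable[[float], float]] = {
    "identity": lambda z: z,
    "logistic": lambda z: 1.0 / (1.0 + math.exp(-z)),
    "tanh": math.tanh,
    "relu": lambda z: max(0.0, z),                 # constant for z < 0
    "heaviside": lambda z: 1.0 if z >= 0 else 0.0,  # only two output values
    "square": lambda z: z * z,                     # sigma(-z) == sigma(z)
}

for name, sigma in activations.items():
    print(f"{name:10s} injective on samples: {looks_injective(sigma)}")
```

The range is kept small on purpose. Far out in the tails, the logistic function rounds to exactly 0.0 or 1.0 in floating point and would falsely look non-injective.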

We will eventually put pictures of decision boundaries of SLSO perceptrons on

We can then see that the