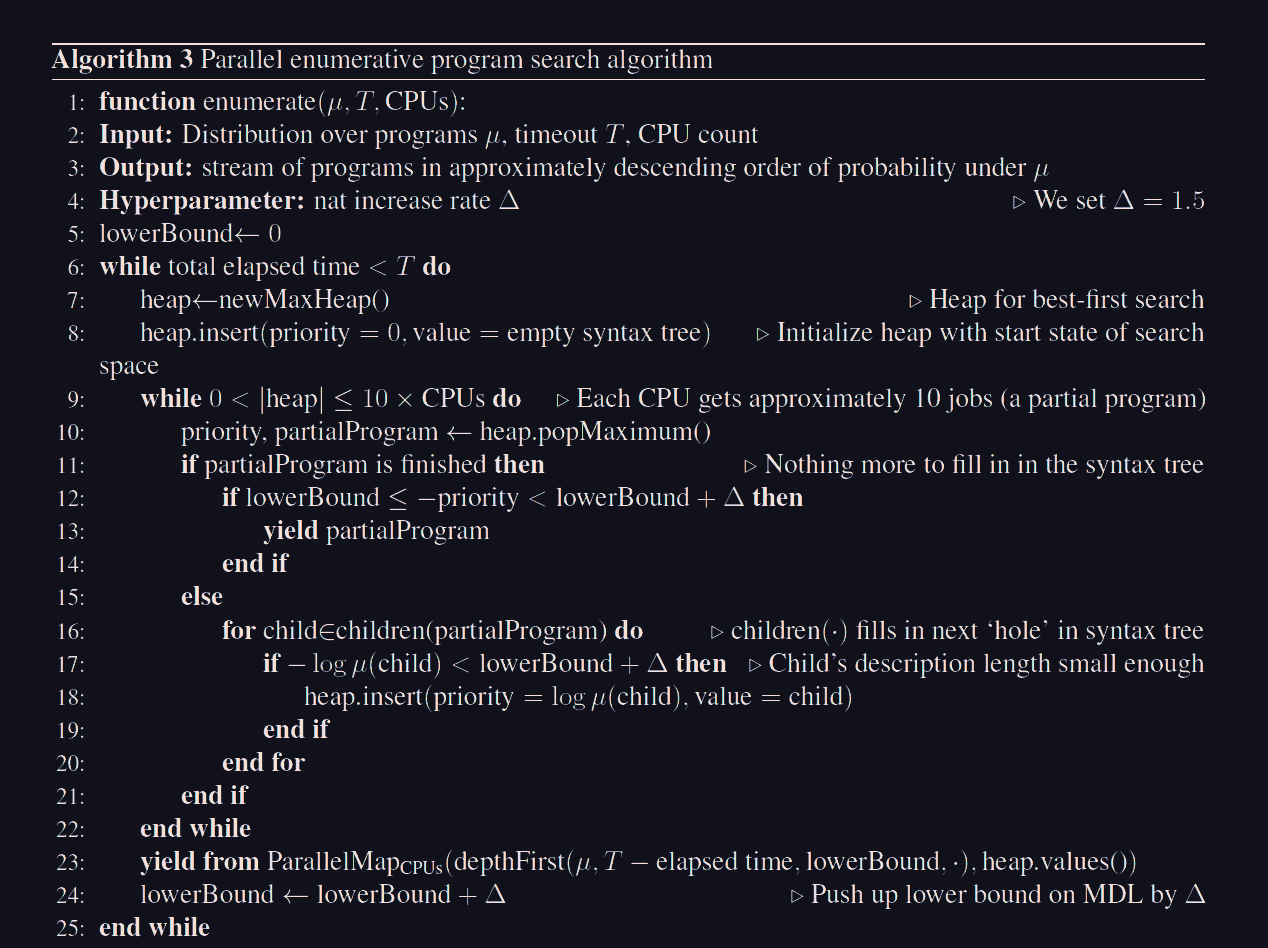

Dream Coder - Algorithm 3

Let us say you have a sample space

Similarly, an event

So less likely events carry more information when they occur. in general, if

Description of the Enumerate Algorithm

Setup and Notation

Let

This measures the amount of information carried by observing

-

If

, then bit. -

If

, then bits.

The goal of the enumerate procedure is to generate programs in approximately decreasing order of probability (or equivalently, increasing order of information) under

Heap Search Phase

Initialization

-

Define a lower bound

and an upper bound , where is a hyperparameter controlling the granularity of the search. -

Initialize a max-heap, where programs are ordered by their priority, defined as

(equivalently, higher probabilities are prioritized). -

Insert the initial empty program (or syntax tree) into the heap with priority

.

Iterative Heap Processing

While the heap size is manageable (specifically,

-

Pop a program

from the heap: -

If

is complete (a fully-specified program): - Check whether

. If true, yield .

- Check whether

-

Otherwise, compute the set of children

, where each child fills in the next "hole" in 's syntax tree.

-

-

For each child

: -

Compute its information

. -

If

(i.e., is not too improbable), insert into the heap with priority .

-

If the heap grows too large (

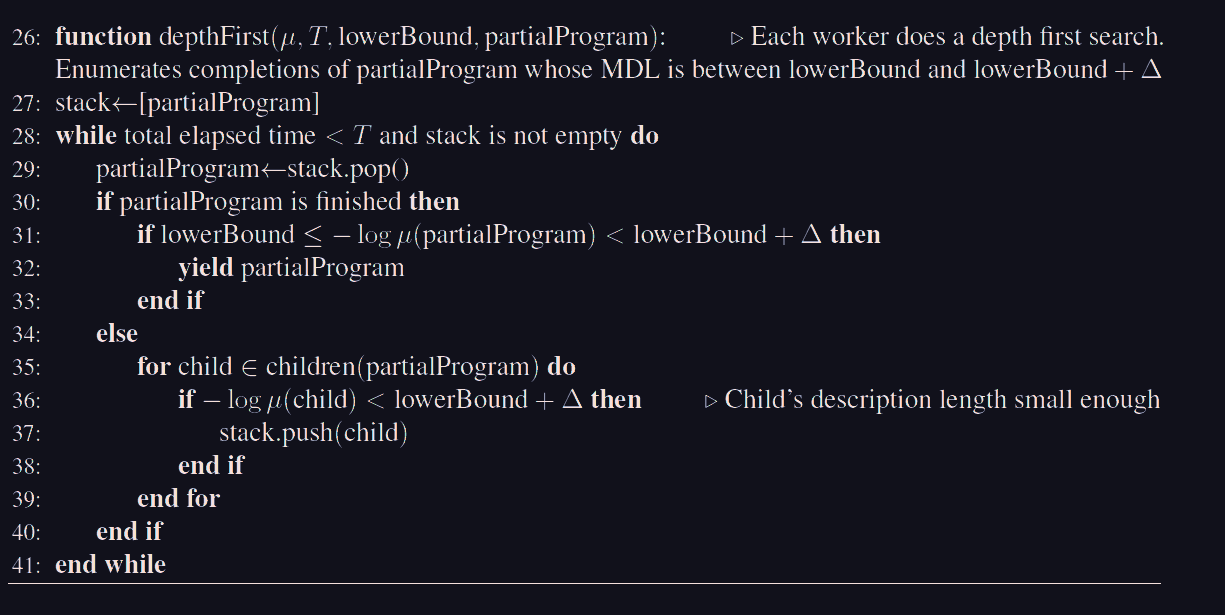

Parallel DFS Phase

Task Distribution

-

Distribute up to

programs from the heap to the CPUs for processing. Each CPU receives approximately programs (partial programs) as independent tasks. -

Set the initial search bounds for the DFS to

and .

Per-CPU DFS Procedure

Each CPU processes its assigned tasks sequentially. For each partial program

-

Perform a depth-first search (DFS) to explore all completions of

. -

When a completion

is found: -

Check if

. -

If true, yield

.

-

-

For incomplete programs:

-

Compute their children

. -

For each child

: -

Compute its information

. -

If

, push onto the local DFS stack for further exploration.

-

-

Each CPU finishes all of its

Lower Bound Adjustment

Once all CPUs complete their assigned jobs:

-

Increase the lower bound by

, i.e., set . -

Adjust the upper bound accordingly, i.e.,

. -

Reuse the same set of programs from the heap for the next DFS phase, but now search for completions with higher information (i.e., less probable programs).

Summary of the Algorithm

-

The algorithm starts with a Heap Search Phase, which uses a max-heap to explore programs with information

. -

When the heap grows too large, the algorithm transitions to a Parallel DFS Phase:

-

Each CPU explores its assigned programs in parallel, searching for completions within the current information bounds

. -

The bounds are incrementally shifted by

after each round, allowing the algorithm to progressively explore less probable programs.

-

This approach efficiently enumerates programs in decreasing order of probability while balancing parallelism and memory constraints.

A note on (MDL) or minimum description length:

Basically, the information of a program is an estimate of its MDL, that is what the upper bound is for, highly informative programs are longer and are lower probability.