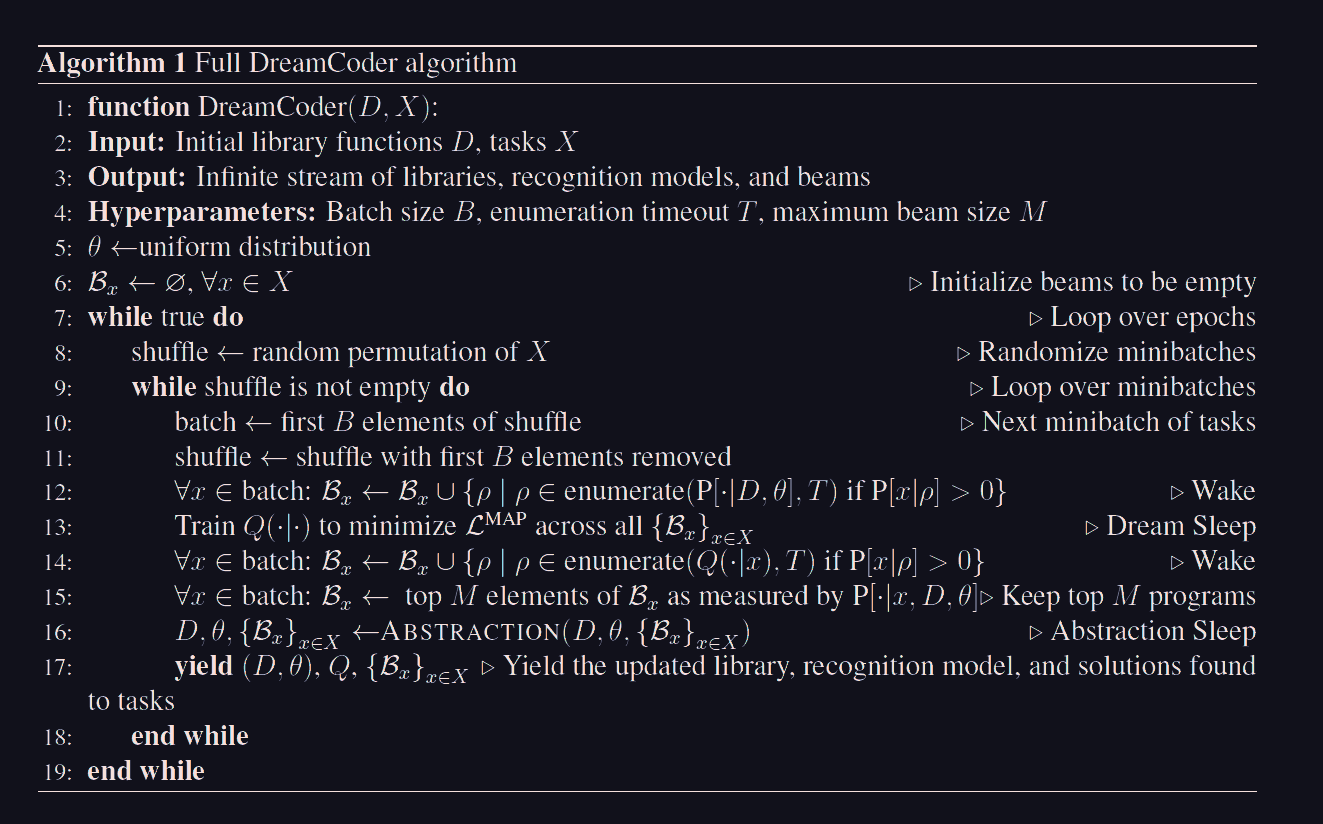

Dream Coder - Algorithm 1

-

Initialize recognition model parameters:

We initialize the parameters of the recognition model, denoted as, randomly from a uniform distribution. This parameter governs how likely a program is based on its features. -

Initialize beams for each task:

For each task, the beam (a prioritized list of candidate programs) is assigned the empty set . -

Epoch loop:

We enter an infinite loop over epochs. This allows the algorithm to continually refine its outputs, and it can be terminated at any point if desired. -

Random task permutation:

The variable shuffle is assigned a random permutation of the tasksto introduce stochasticity and avoid deterministic behavior. -

Minibatch loop:

While shuffle is not empty, the algorithm loops over mini-batches of tasks, ensuring that tasks are processed in manageable subsets. -

Batch selection:

A batch is extracted from shuffle, consisting of the firsttasks. This batch will be processed together during the "wake" phase. -

Update shuffle:

The firstelements are removed from shuffle, preparing it for the next iteration. -

Enumeration (wake phase):

The function enumerate is an abstract routine that takes:- A program distribution (e.g.,

) that assigns probabilities to programs, - A timeout

that limits the computation,

and returns programs in approximately decreasing order of their probability.

For each task, the beam is updated by adding programs if the conditional probability , meaning successfully solves the task.

- A program distribution (e.g.,

-

Training the recognition model (Dream Sleep phase):

The recognition modelis trained to minimize the loss , which is the negative log-likelihood of the programs in the beams . This phase updates the recognition model to better predict programs for tasks based on the current beams. -

Re-enumeration (Wake phase):

After updating the recognition model, we repeat the enumeration step for each task, this time using the updated recognition model . Specifically, for each task, we update the beam by adding programs sampled from the distribution , subject to the condition . This step integrates learning from the recognition model into the beams. -

Prune beams:

For each task, we prune the beam to keep only the top programs, as measured by the joint probability . This ensures that the beam remains computationally manageable by focusing only on the most promising programs. -

Abstraction Sleep phase:

The ABSTRACTION function takes as input the current library, , and beams . It identifies useful subprograms across tasks and adds them to the library , allowing the system to "abstract" common patterns into reusable components. This phase improves the efficiency and expressiveness of the library over time. -

Yield updated components:

The updated library, recognition model , parameters , and the beams are yielded back to the user or tasks. This allows for external inspection or use of the current state of the system. -

Continue looping:

The process repeats for the next epoch, incorporating new discoveries, improved recognition models, and a refined library.